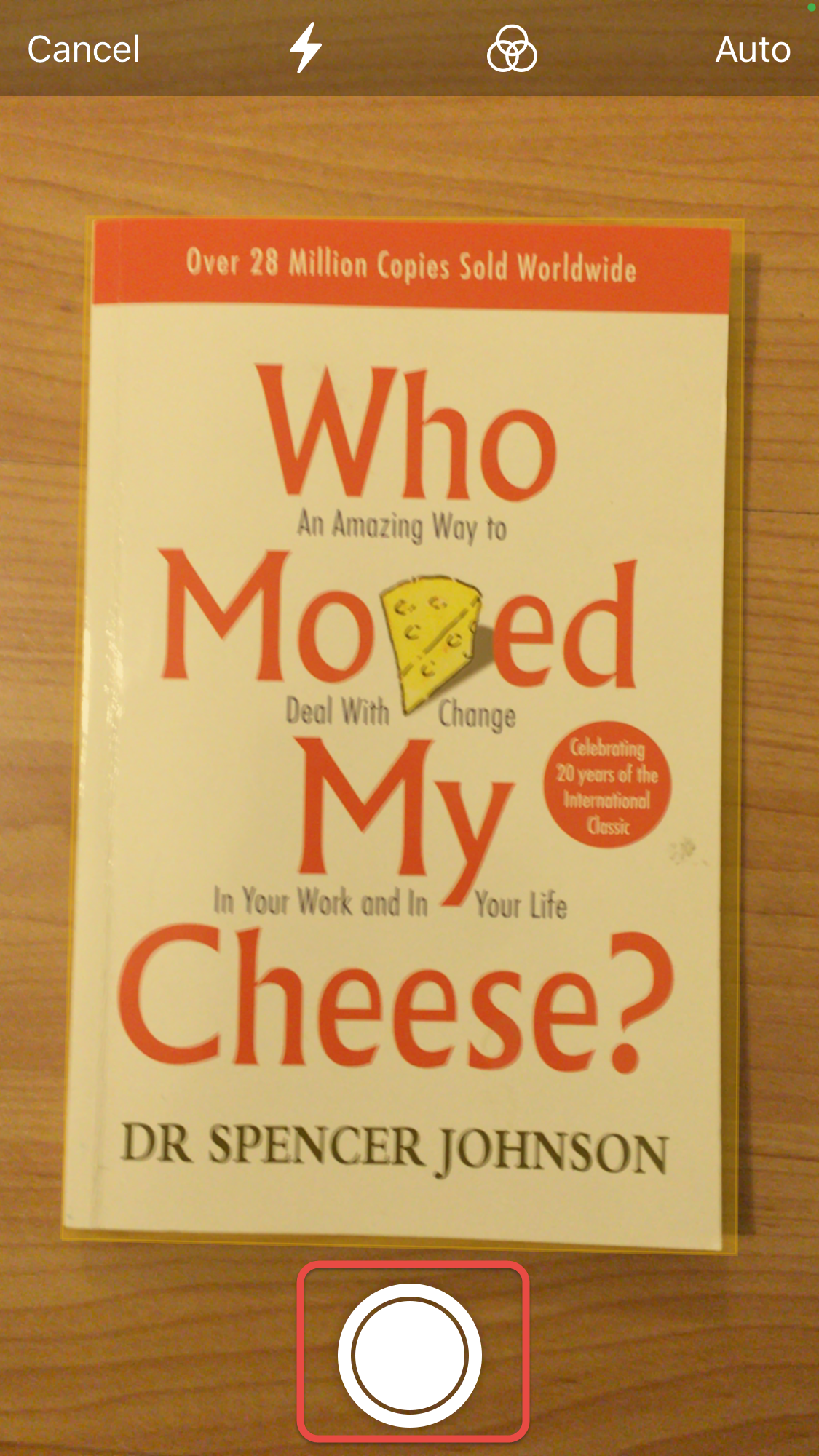

With little work and little washing-up, we can make good homemade bread. Of course, it won't be quite as light as Szabolcs Szabadfi's original bread (Kovászos Kenyér Sütés Szabival, i.e. Sourdough Bread Baking with Szabi), but this way we can bake delicious homemade bread every day or two. I simplified Szabi's baking steps a little, so there is time for other things while the bread is being made. After baking, the large loaf weighs about 1200 g (the small one about 700 g).

Ingredients

450 g flour (700 g for a large loaf)

321 g water (500 g for a large loaf)

11 g salt (18 g for a large loaf)

90 g sourdough starter (140 g for a large loaf) or 1/3 teaspoon of dry yeast

You can find how to make the starter on the Kovász készítés Szabival (Making Sourdough Starter with Szabi) page.
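Both loaf sizes use practically the same proportions. Bakers usually express a recipe in baker's percentages, where each ingredient is given relative to the flour weight. The Python sketch below computes them. It assumes we ignore the flour and water already inside the starter, so the true hydration differs slightly. `RECIPES`, `bakers_percentages` and `scale_recipe` are hypothetical names used only for illustration.

```python
# Ingredient weights in grams, taken from the ingredient list above.
RECIPES = {
    "small": {"flour": 450, "water": 321, "salt": 11, "starter": 90},
    "large": {"flour": 700, "water": 500, "salt": 18, "starter": 140},
}


def bakers_percentages(recipe: dict[str, float]) -> dict[str, float]:
    """Each non-flour ingredient as a percentage of the flour weight.

    Simplification (assumption): the flour and water inside the starter are ignored.
    """
    flour = recipe["flour"]
    return {
        name: round(100 * grams / flour, 1)
        for name, grams in recipe.items()
        if name != "flour"
    }


def scale_recipe(recipe: dict[str, float], flour_g: float) -> dict[str, int]:
    """Scale every ingredient proportionally to a new flour weight, in whole grams."""
    factor = flour_g / recipe["flour"]
    return {name: round(grams * factor) for name, grams in recipe.items()}


for size, recipe in RECIPES.items():
    print(size, bakers_percentages(recipe))
# small {'water': 71.3, 'salt': 2.4, 'starter': 20.0}
# large {'water': 71.4, 'salt': 2.6, 'starter': 20.0}
```

With these ratios you can, for example, scale the small recipe to 600 g of flour. `scale_recipe(RECIPES["small"], 600)` gives 428 g water, 15 g salt and 120 g starter.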

If we follow the schedule below, we mix the ingredients at 10 a.m. and have fresh, crusty homemade bread by 6 p.m.

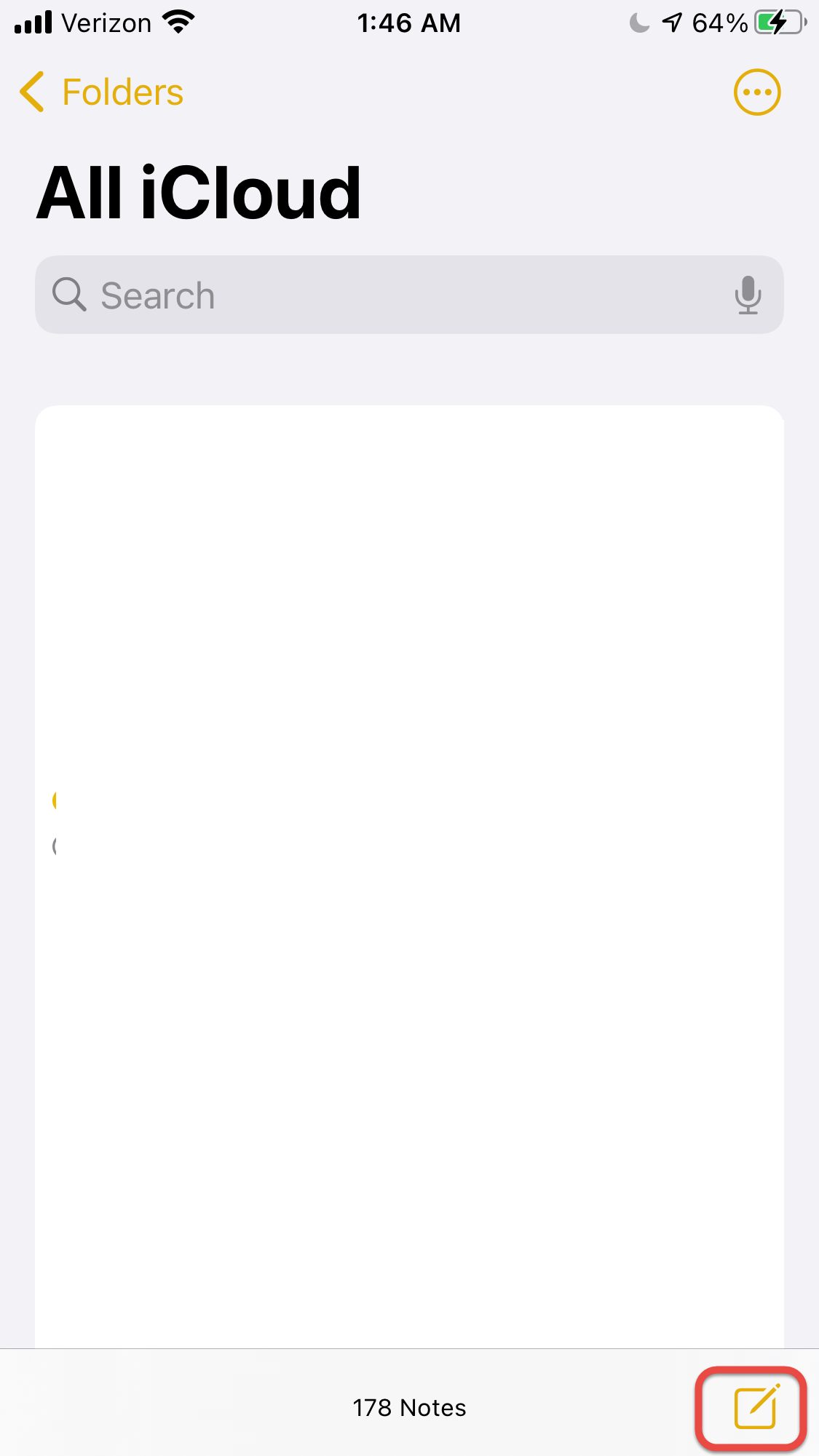

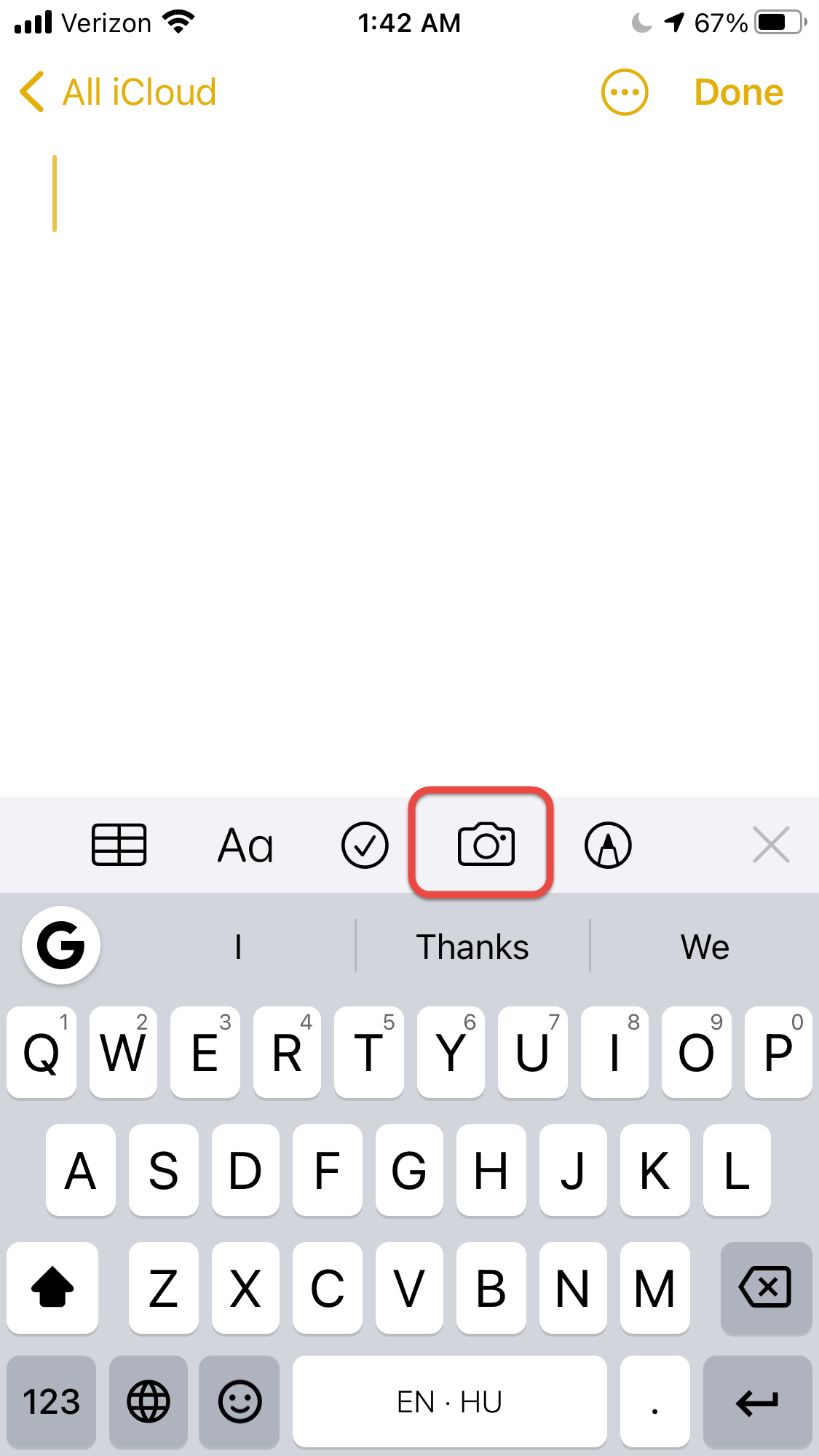

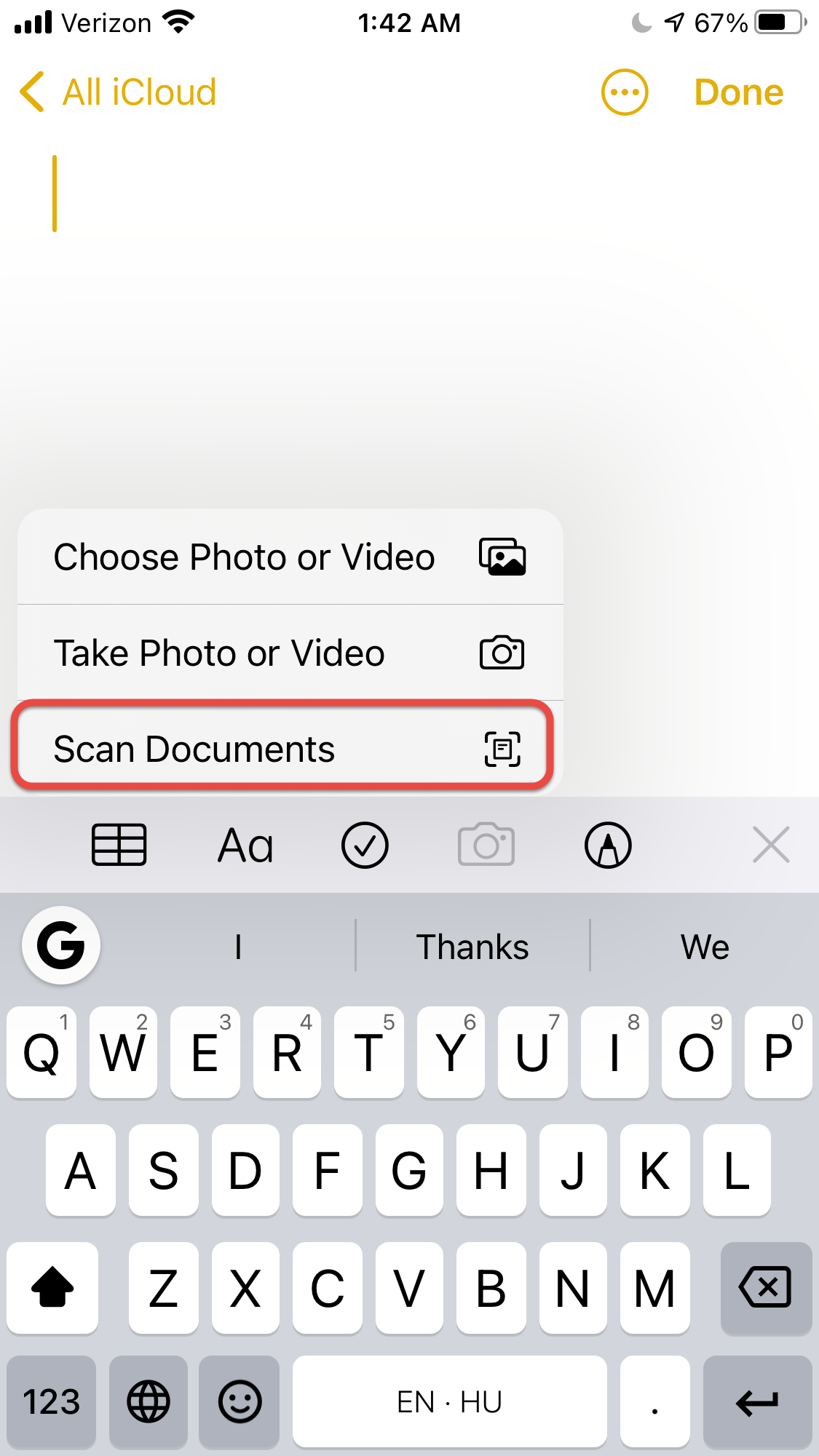

Summary for experienced bakers

For a large loaf, feed the starter the evening before baking

If you are baking a large loaf and the starter has been in the fridge, put it on the kitchen counter and wait 2 hours before feeding it, so that it can warm up.

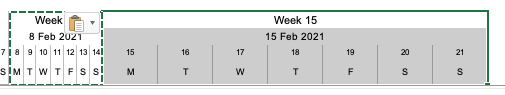

| Activity | Small (700 g) loaf | Large (1200 g) loaf |
|---|---|---|
| If you are baking a large loaf, transfer starter to a clean jar and discard the rest. | Nothing to do; leave the starter in the fridge overnight. | 50 g starter, 100 g flour, 80 g water |

If you are baking a large loaf and your starter is strong, i.e. it doubles in 2–3 hours at room temperature, put it in the fridge after 1 hour. If your starter needs more time to double, leave it out on the kitchen counter overnight.
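The evening routine for a large loaf comes down to a fixed feed and one decision. The sketch below encodes that rule. It assumes that "doubles in 2–3 hours" means "doubles within 3 hours". `FEED`, `feed_total` and `overnight_plan` are hypothetical helpers and not part of the original recipe.

```python
# Evening feed for a large loaf, in grams (values from the table above).
FEED = {"starter": 50, "flour": 100, "water": 80}

# Assumption: "doubles in 2-3 hours" is read as "doubles within 3 hours".
STRONG_STARTER_MAX_HOURS = 3


def feed_total(feed: dict[str, int]) -> int:
    """Total weight of the starter right after feeding."""
    return sum(feed.values())


def overnight_plan(hours_to_double: float) -> str:
    """Where the fed starter spends the night, following the rule in the text."""
    if hours_to_double <= STRONG_STARTER_MAX_HOURS:
        # Strong starter: let it work for 1 hour, then slow it down in the fridge.
        return "counter for 1 hour, then fridge"
    # Slower starter: it needs the whole night at room temperature to double.
    return "counter overnight"


print(feed_total(FEED))     # 230
print(overnight_plan(2.5))  # counter for 1 hour, then fridge
print(overnight_plan(5))    # counter overnight
```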

On baking day

If the starter spent the night in the fridge, put it on the counter 2–3 hours before kneading, so that it warms up and doubles in size.

| Start time | Activity | Small (700 g) loaf | Large (1200 g) loaf | Wait afterwards |
|---|---|---|---|---|
| 10:00 | Autolyse with 38–40 °C water | 450 g flour, 321 g water | 700 g flour, 500 g water | 30 min |
| 10:30 | Set aside the mother starter | | | |
| 10:40 | Kneading | 90 g starter, 11 g salt | 140 g starter, 18 g salt | 30 min |
| 11:30 | 1st fold | | | 30 min |
| 12:00 | 2nd fold | | | 30 min |
| 12:30 | 3rd fold | | | 1 h 30 min |
| 14:00 | Move the bowl to the counter | | | 2 h 30 min |
| 16:00 | Preheat the oven to 250 °C | | | 30 min |
| 16:30 | Shape and load; bake at 250 °C | | | 30 min |
| 17:00 | Bake at 220 °C | | | 20–25 min |
| 17:30 | The bread is ready | | | |
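If 10:00 does not suit you, you can move every step by the same amount. The sketch below does this with Python's standard `datetime` module. It assumes the waiting times stay unchanged, which only holds if the kitchen is about as warm as usual, because rising speed depends on temperature. `SCHEDULE` and `shift_schedule` are hypothetical names.

```python
from datetime import datetime

TIME_FORMAT = "%H:%M"

# Start times copied from the baking-day table above.
SCHEDULE = [
    ("10:00", "Autolyse"),
    ("10:30", "Set aside the mother starter"),
    ("10:40", "Kneading"),
    ("11:30", "1st fold"),
    ("12:00", "2nd fold"),
    ("12:30", "3rd fold"),
    ("14:00", "Move the bowl to the counter"),
    ("16:00", "Preheat the oven to 250 °C"),
    ("16:30", "Shape and load; bake at 250 °C"),
    ("17:00", "Bake at 220 °C"),
    ("17:30", "The bread is ready"),
]


def shift_schedule(schedule, new_start):
    """Move every step by the same offset so that the first step starts at new_start."""
    offset = (datetime.strptime(new_start, TIME_FORMAT)
              - datetime.strptime(schedule[0][0], TIME_FORMAT))
    return [
        ((datetime.strptime(time, TIME_FORMAT) + offset).strftime(TIME_FORMAT), step)
        for time, step in schedule
    ]


for time, step in shift_schedule(SCHEDULE, "08:00"):
    print(time, step)
```

For example, starting at 08:00 moves the end of baking to 15:30.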

Detailed guide

The evening before: feeding the starter for baking

The evening before, we build up the starter so that there is enough of it by morning to leaven the bread. To do this, we feed it more flour and water. See Kovász feletetése sütéshez (Feeding the Starter for Baking).

Autolyse

- Pour the 38–40 °C water into the mixing bowl,

- Sift the flour on top of the water,

- Mix the flour and water with the stand mixer's dough hook and let it rest for 30 minutes. This step lets the flour absorb the added water.

Set aside the mother starter

From the fed starter, put 50 g aside in a clean jar so that we can keep baking bread with it in the future; the remaining 140 g (the amount for a large loaf) goes into today's bread. See Tegyük el az Anya Kovászt (Setting Aside the Mother Starter).

Kneading

- Put the starter or yeast on top of the flour,

- Knead for 1 minute with the stand mixer,

- Slowly add the salt so that it mixes in evenly,

- Knead for another minute with the stand mixer,

- Put the dough into a bowl greased with 1 teaspoon of oil, and cover it.

If the kitchen is cold, put the bowl in an oven at 30 °C (86 °F). The easiest way is to leave the door of the switched-off oven slightly ajar and warm it up with the oven light. Once the oven reaches the desired temperature, close the door and switch off the light.

Warm rise

Let the dough rise for 3 hours in a warm place.

During the first part of the rise, fold the dough in the bowl three times, every 30 minutes:

- Gently pull up one edge of the dough and fold it over the top.

- Turn the bowl 180 degrees and do the same on the opposite side: pull up the edge of the dough and fold it over the top.

- Turn the bowl 90 degrees and repeat the stretch and fold on the other two sides as well.

- Cover the bowl and put it back in the warm oven.

After the third fold, let the dough rise for another 1 hour 30 minutes in the warm oven.

Rise on the counter

After the 3 hours of warm rising in total, move the bowl to the counter, take off its lid and cover it with a kitchen towel. Let it rise on the counter for 2 hours 30 minutes.

Preparing to bake

The dough is ready to bake when it has risen and become full of air.

We bake the bread in steam so that it rises well and develops a nicely crackled crust. Find a heat-resistant cast-iron, ceramic or heat-proof glass dish, ideally one with a lid. If the dish has no lid, we can cover it with aluminum foil.

- Put the dish you will bake the bread in into the cold oven.

- If the dish has no lid and you are not covering it with foil, put a pan of water on the bottom of the oven so that steam forms in the oven.

- Preheat the oven, with the dish inside, to 250 °C (480 °F).
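The oven temperatures in °F given in this recipe appear to be rounded to the nearest 10 degrees. For example, 250 °C is exactly 482 °F and 220 °C is exactly 428 °F. The small converter below assumes that rounding convention. The function names are hypothetical.

```python
def celsius_to_fahrenheit(celsius: float) -> float:
    """Exact conversion: °F = °C × 9/5 + 32."""
    return celsius * 9 / 5 + 32


def oven_dial(fahrenheit: float) -> int:
    """Round to the nearest 10 °F (assumed convention for oven settings)."""
    return round(fahrenheit / 10) * 10


for c in (30, 220, 250):
    f = celsius_to_fahrenheit(c)
    print(f"{c} °C = {f:.1f} °F (oven setting about {oven_dial(f)} °F)")
```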

Shaping and loading

- Put a heat-resistant cast-iron pot, ceramic or glass dish into the cold oven and preheat the oven to 250 °C (480 °F).

- When the oven has heated up,

- turn the dough out onto a floured cutting board,

- fold the edges of the dough up so that it becomes taut,

- place it on baking paper with the rough (seam) side down,

- lower it into the hot dish,

- score the top of the dough with a sharp knife so that it can expand and split there,

- cover the dish with its lid or with aluminum foil.

- Bake covered at 250 °C (480 °F) for 30 minutes.

Halfway through baking

- Take the bread out of the dish and place it directly on the oven rack, without the baking paper.

- If the bottom of the loaf is already much darker than the top, put aluminum foil under it so that the bottom does not burn.

- Reduce the oven temperature to 220 °C (430 °F).

- Bake for 20–25 minutes, until the edges of the score on top start to char, so that the inside bakes through properly.