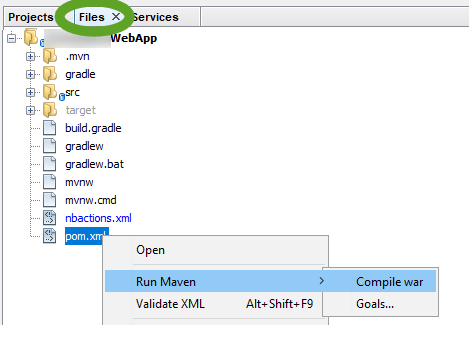

Artifactory supports multiple repository types, and Docker is one of them. To create a new Docker repository in Artifactory, follow the steps below.

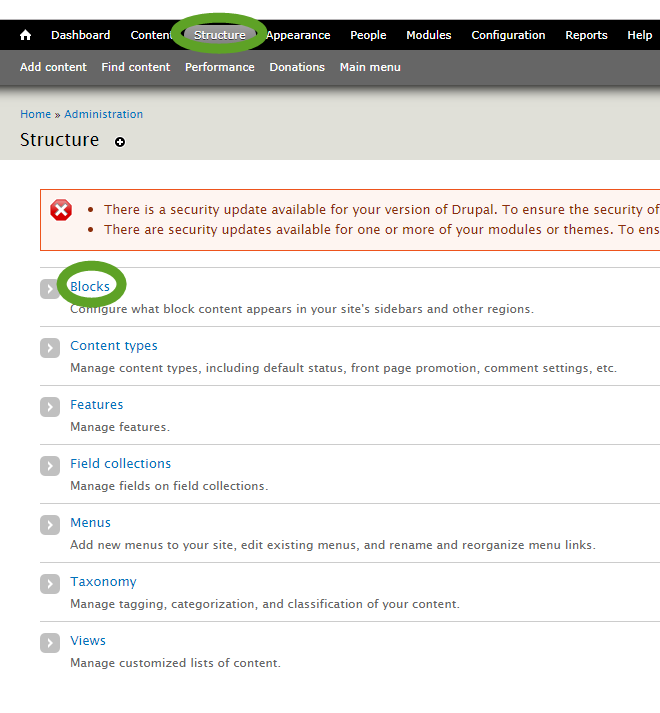

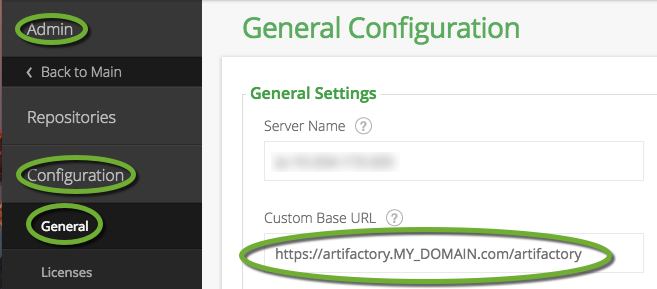

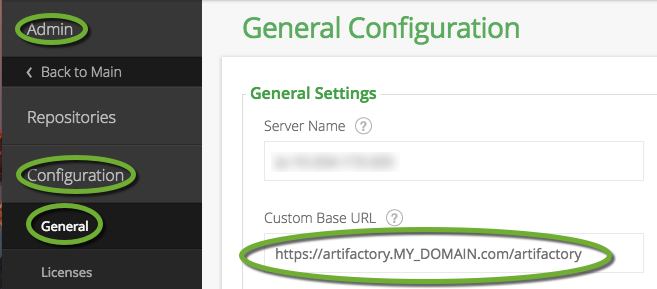

Set the Custom Base URL of the Artifactory server

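The Artifactory documentation at https://www.jfrog.com/confluence/display/RTF/Configuring+NGINX warns:

“When using an HTTP proxy, the links produced by Artifactory, as well as certain redirects contain the wrong port and use the http instead of https”.

Setting the Custom Base URL to the address clients actually use makes the links and redirects Artifactory generates point to that address.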

- On the left side select Admin,

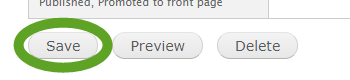

- Under Configuration, on the General page, enter the URL clients actually use to reach the Artifactory server (the public address in front of the proxy) in the Custom Base URL field, and click the Save button.

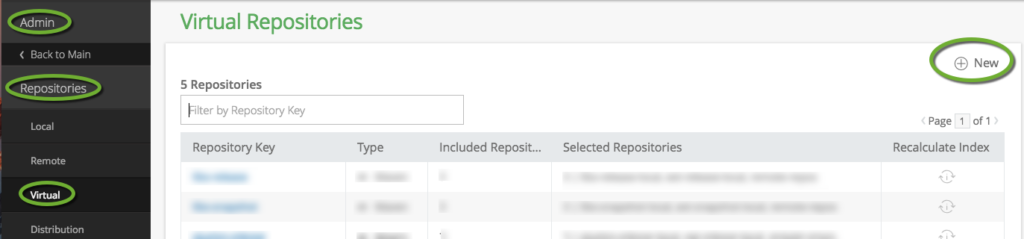

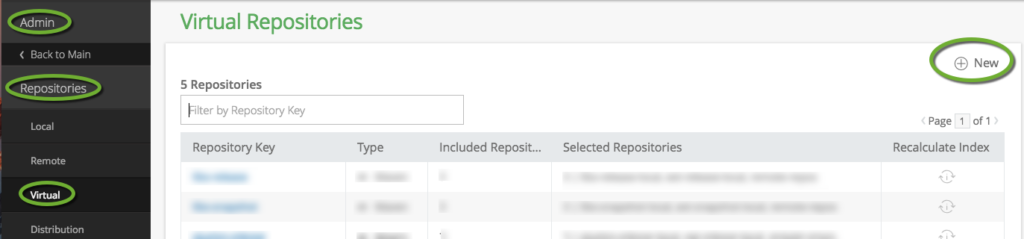
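If you prefer to script this step, the same setting can be changed through the Artifactory REST API. The sketch below assumes the `PUT /api/system/configuration/baseUrl` endpoint (plain-text body, admin credentials) described in the JFrog REST API reference; the host names and credentials are hypothetical, and the function only builds the request, so check the endpoint against your Artifactory version before sending it.

```python
import base64
import urllib.request


def build_base_url_request(server: str, base_url: str, user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a request that sets Artifactory's Custom Base URL.

    Assumption: the endpoint is PUT {server}/api/system/configuration/baseUrl,
    it takes the new base URL as a plain-text body and requires an admin user.
    """
    endpoint = server.rstrip("/") + "/api/system/configuration/baseUrl"
    # HTTP Basic authentication header built from the admin credentials.
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        endpoint,
        data=base_url.encode("utf-8"),
        method="PUT",
        headers={"Content-Type": "text/plain", "Authorization": f"Basic {token}"},
    )


if __name__ == "__main__":
    # Hypothetical addresses: the internal server and the public HTTPS address.
    req = build_base_url_request(
        "http://localhost:8081/artifactory",
        "https://artifactory.example.com/artifactory",
        "admin",
        "password",
    )
    print(req.get_method(), req.full_url)
    # To apply the change you would send it with urllib.request.urlopen(req).
```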

Create one virtual Docker repository for all of your Docker repositories

As recommended by the Artifactory documentation at https://www.jfrog.com/confluence/display/RTF/Configuring+a+Reverse+Proxy#ConfiguringaReverseProxy-DockerReverseProxySettings, create one virtual Docker repository that aggregates all other Docker repositories, so only this repository has to be set up on the reverse proxy server.
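To picture what the virtual repository does, here is a deliberately simplified model: clients use a single address, and a request for an image is answered from the first aggregated repository that holds it, roughly in the order the repositories are listed. The repository names are hypothetical, and real Artifactory resolution also involves caching, include/exclude patterns and permissions, which this toy sketch ignores.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Repo:
    """A repository that stores images, identified as "name:tag" strings."""
    key: str
    images: set[str] = field(default_factory=set)


@dataclass
class VirtualRepo:
    """A single entry point that aggregates other repositories (simplified model)."""
    key: str
    members: list[Repo]  # searched in this order

    def resolve(self, image: str) -> str | None:
        """Return the key of the first member repository holding `image`, or None."""
        for repo in self.members:
            if image in repo.images:
                return repo.key
        return None


if __name__ == "__main__":
    # Hypothetical repositories aggregated behind one virtual repository.
    dev = Repo("docker-dev-local", {"myapp:1.0"})
    prod = Repo("docker-prod-local", {"myapp:1.0", "base:2.3"})
    virtual = VirtualRepo("docker-virtual", [dev, prod])
    print(virtual.resolve("myapp:1.0"))
    print(virtual.resolve("base:2.3"))
```

Because every image is reached through `docker-virtual`, the reverse proxy only needs a rule for that one repository.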

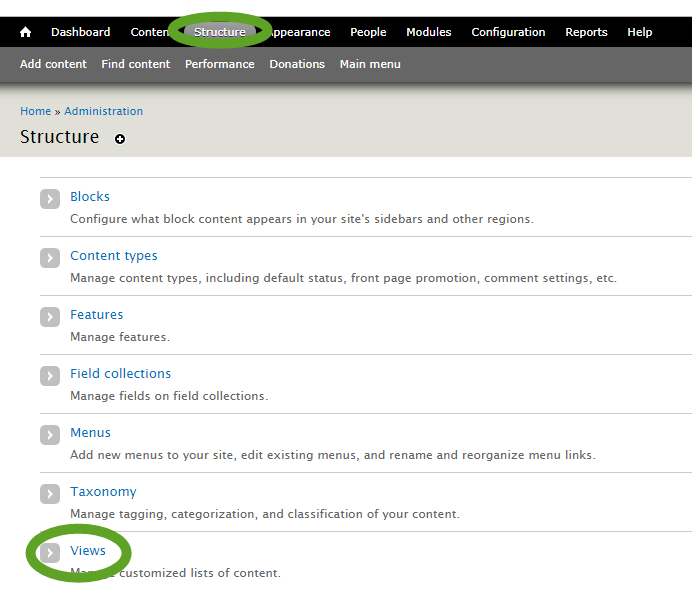

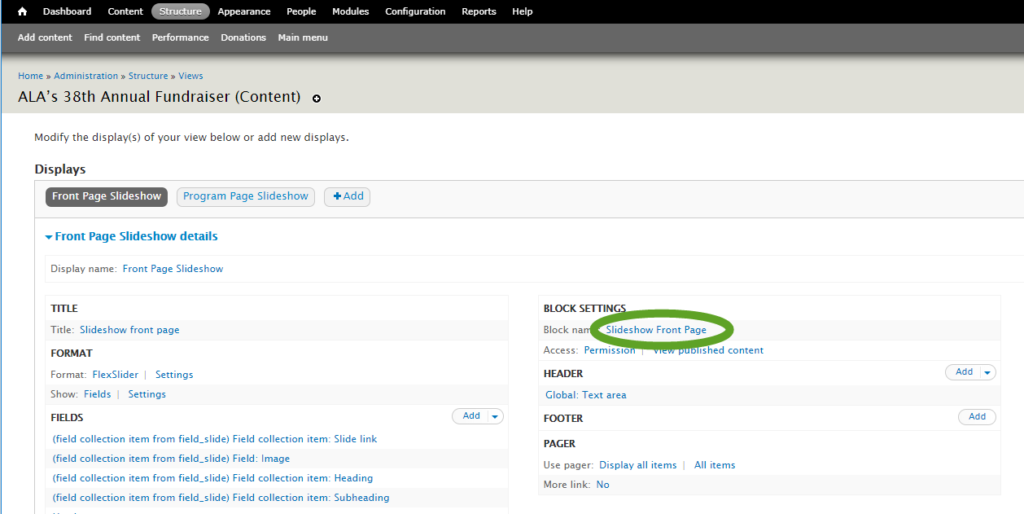

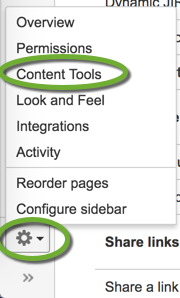

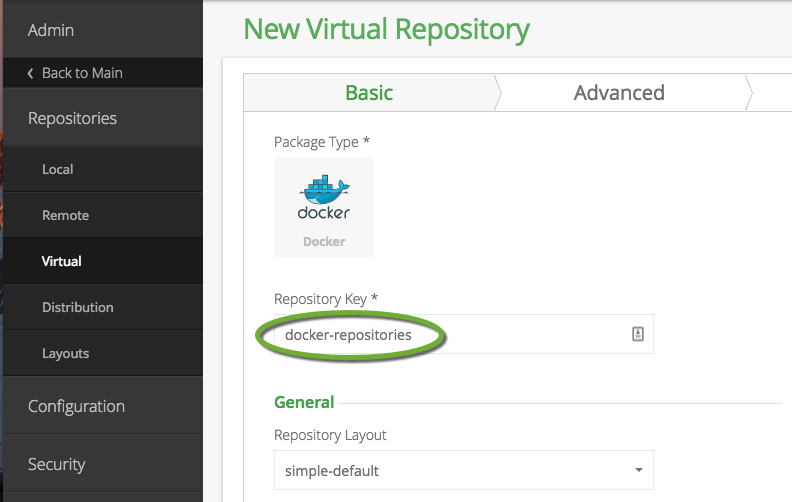

- On the left side select Admin,

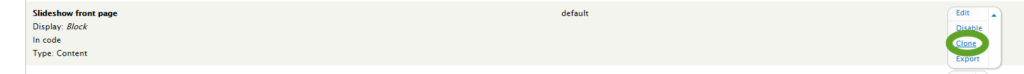

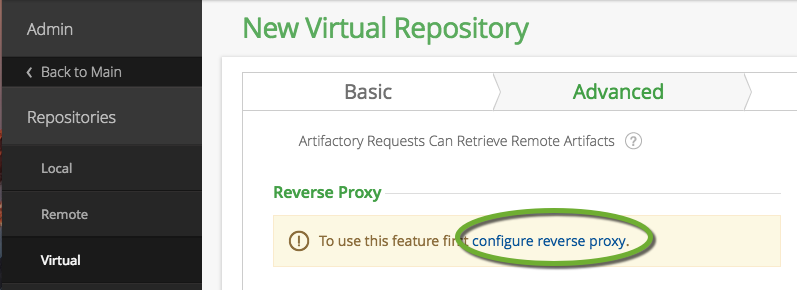

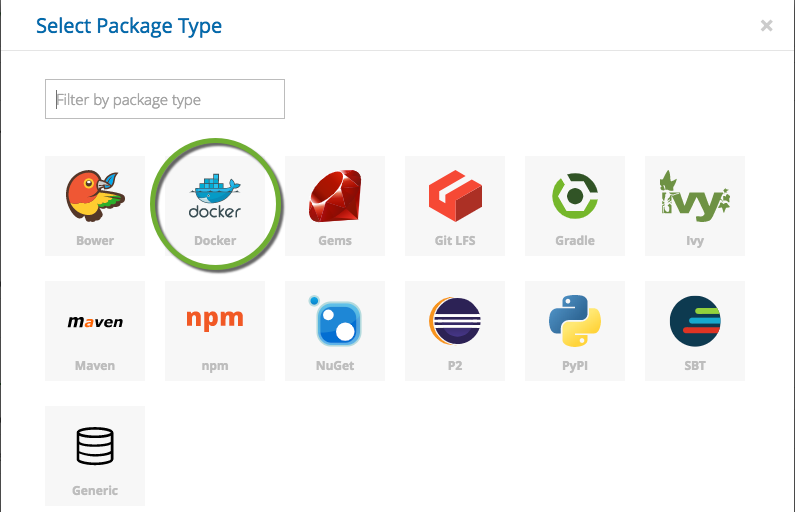

- In the Repositories section on the Virtual page click New,

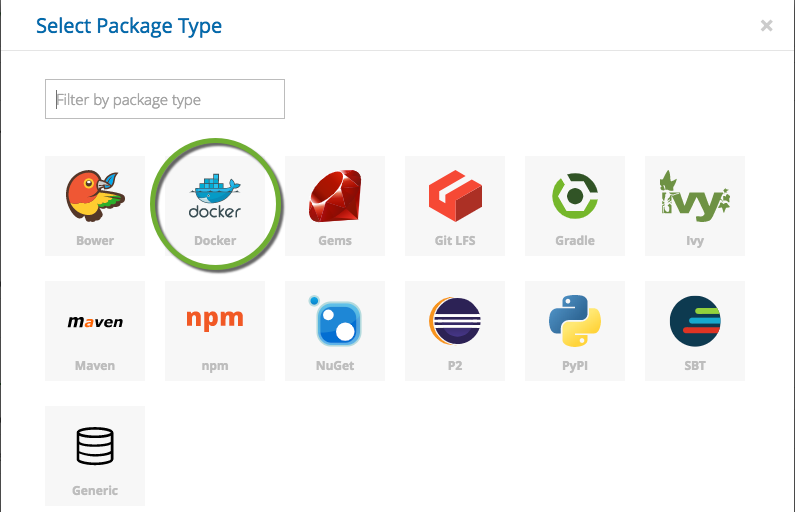

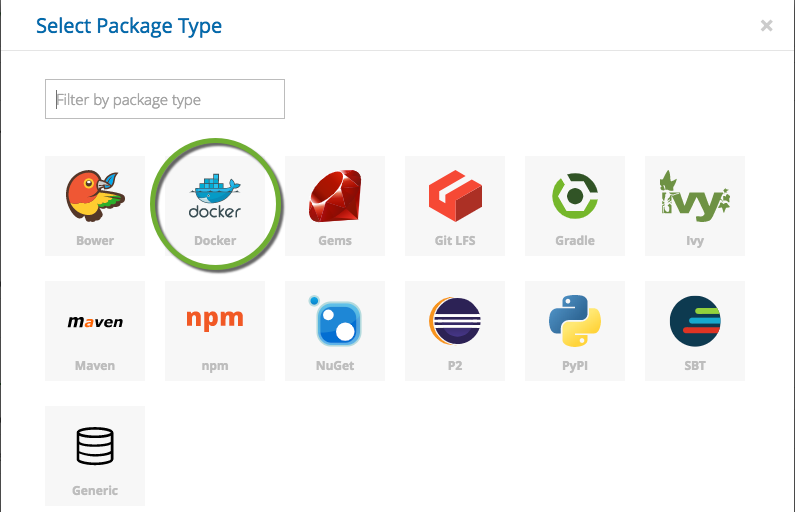

- Select the Docker package type,

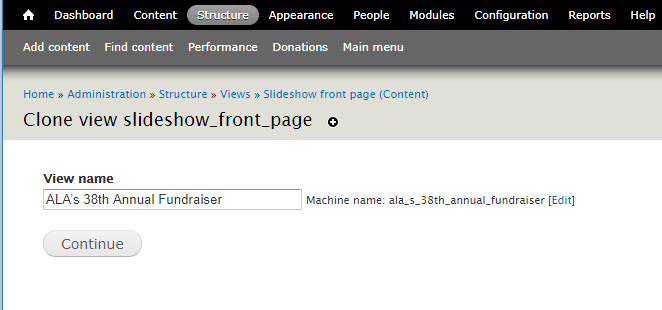

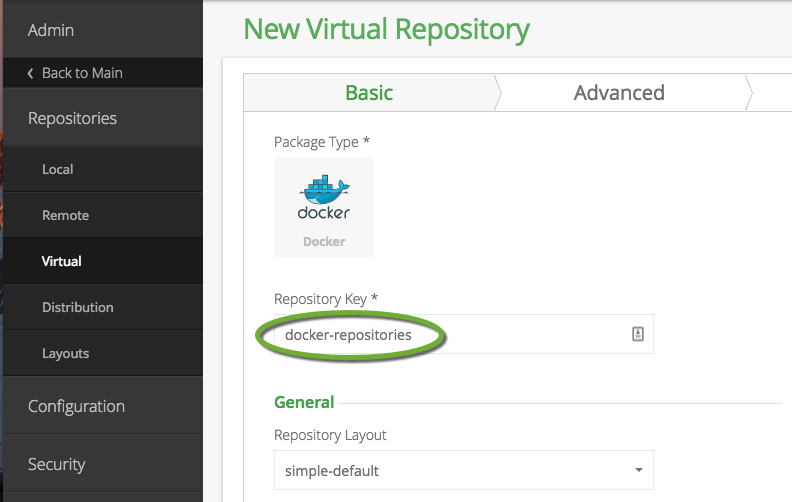

- Enter a name for the repository,

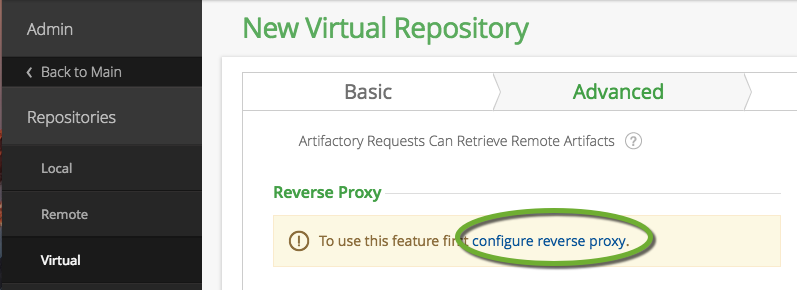

- Command-click (Ctrl-click on Windows) the configure reverse proxy link to open it in a new tab, where you can generate the script that sets up the reverse proxy server,

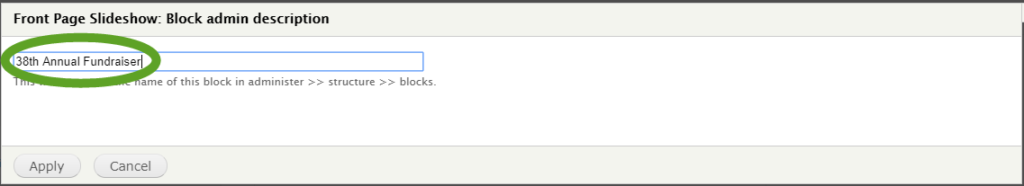

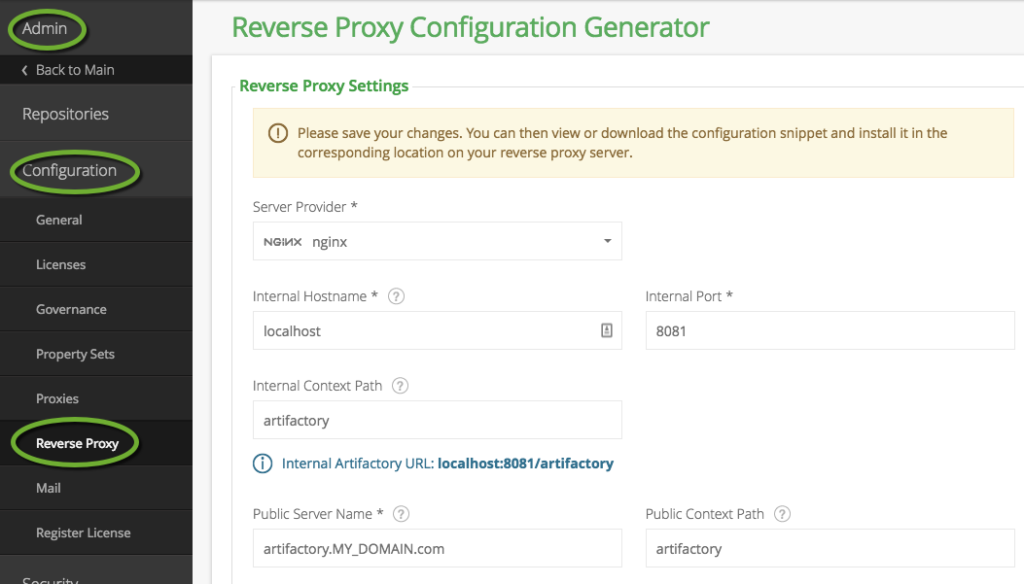

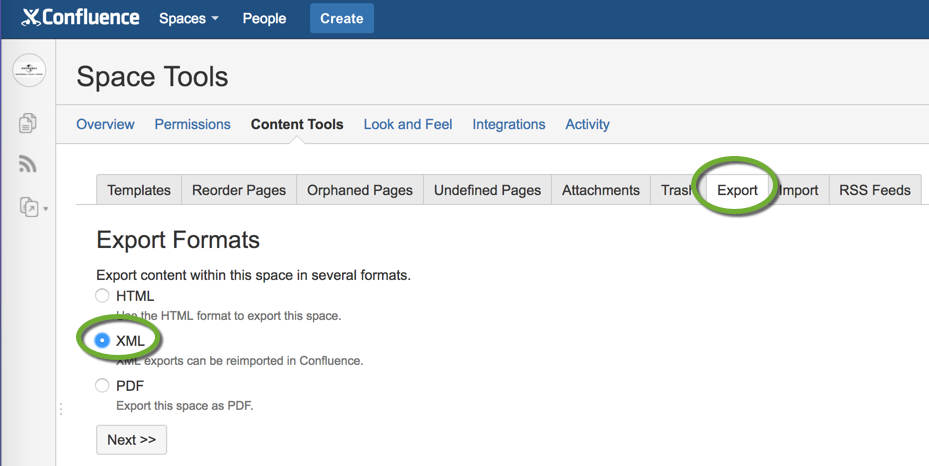

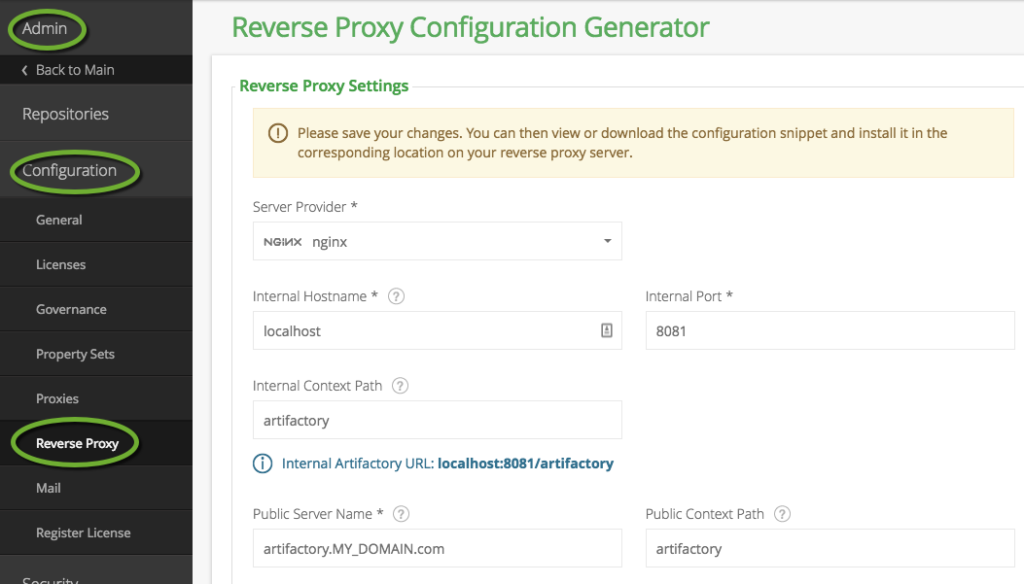

Create the reverse proxy script

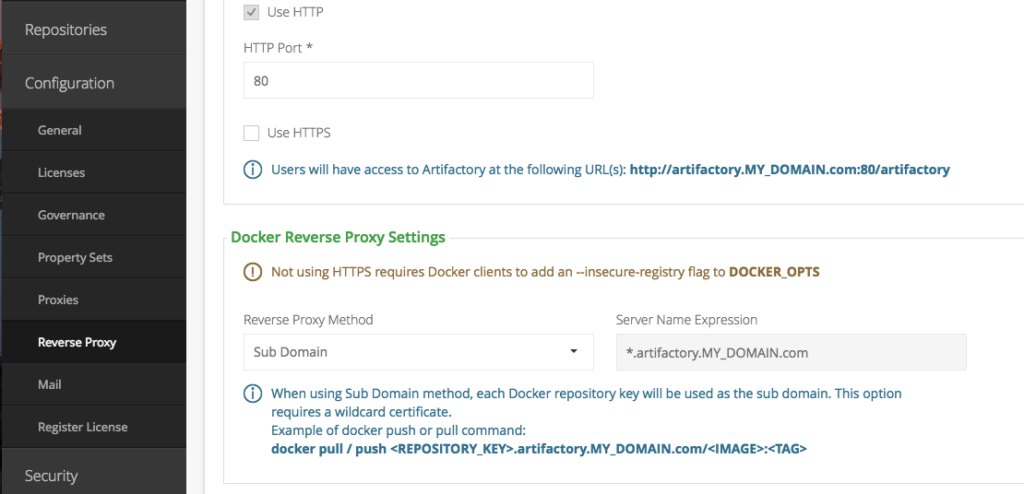

- In the Configuration section on the Reverse Proxy page, fill out the form. If the reverse proxy server will be installed on the Artifactory server itself, enter localhost in the Internal Hostname field.

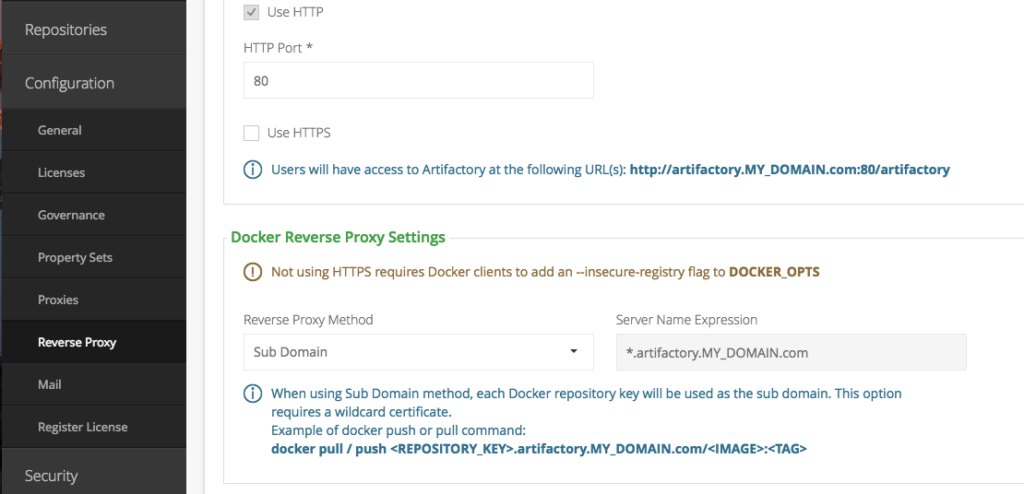

If a load balancer in front of the Artifactory server holds the SSL certificate and terminates HTTPS, you don’t need to enable the HTTPS protocol on the reverse proxy. If you have a wildcard certificate, you can select the Sub Domain reverse proxy method, which serves each Docker repository from its own sub-domain of the server name.

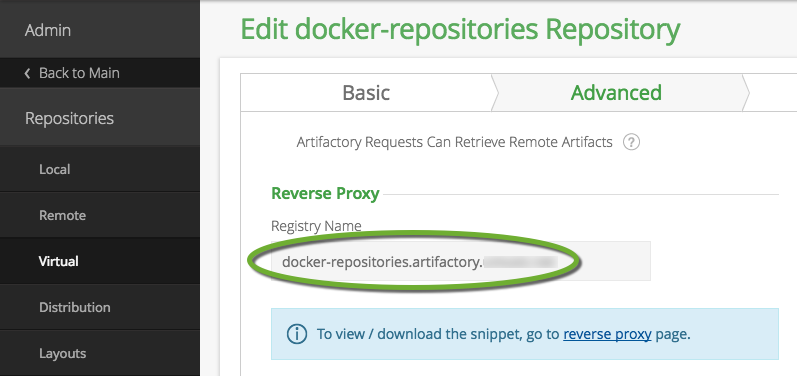
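The reverse proxy method determines the address Docker clients use. With the Sub Domain method each Docker repository gets its own host name under the server name, which is why a wildcard certificate is needed; with the port method each repository is reached on its own port of the same host. A small sketch of the resulting image references, assuming a hypothetical server name `artifactory.example.com`, repository key `docker-virtual` and port `5001`:

```python
from typing import Optional


def image_reference(method: str, server: str, repo_key: str, image: str, tag: str,
                    port: Optional[int] = None) -> str:
    """Return the image reference a Docker client would use (illustrative only)."""
    if method == "subdomain":
        # The repository key becomes the left-most label of the host name.
        return f"{repo_key}.{server}/{image}:{tag}"
    if method == "port":
        # The repository is identified by the port assigned to it.
        if port is None:
            raise ValueError("the port method needs the port assigned to the repository")
        return f"{server}:{port}/{image}:{tag}"
    raise ValueError(f"unknown method: {method}")


if __name__ == "__main__":
    print(image_reference("subdomain", "artifactory.example.com", "docker-virtual", "myapp", "1.0"))
    print(image_reference("port", "artifactory.example.com", "docker-virtual", "myapp", "1.0", port=5001))
```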

Save the Virtual repository

- On the New Virtual repository tab click Next at the bottom of the page,

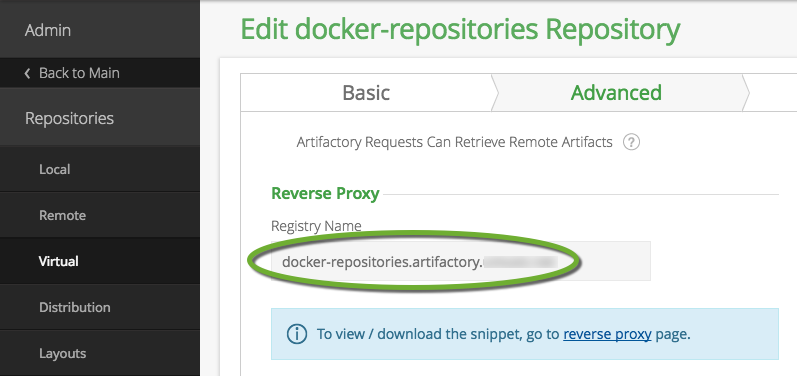

- The Advanced tab shows the name of the Docker registry,

- Click the Save & Finish button to create the repository.

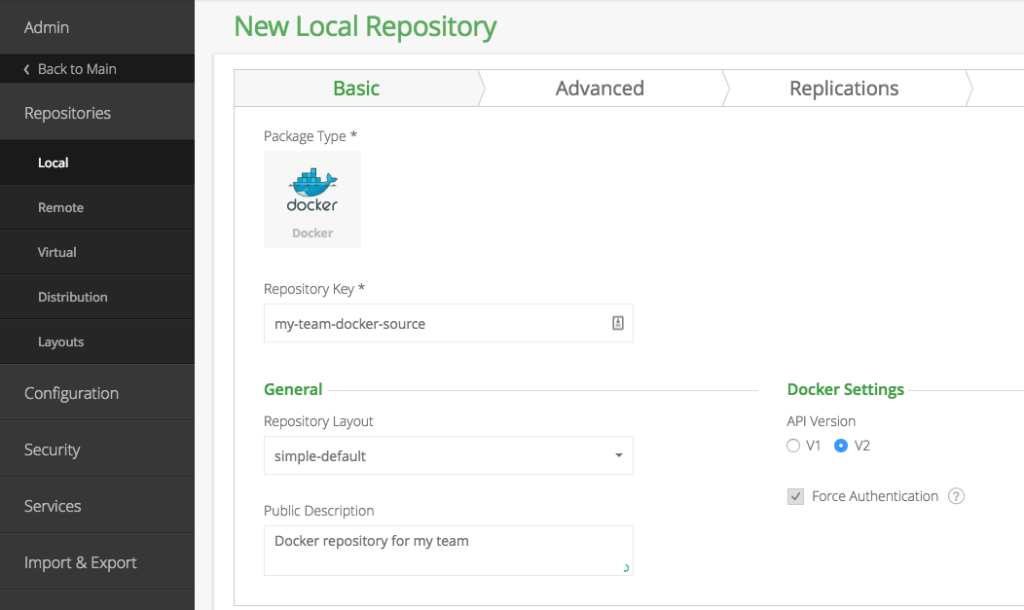

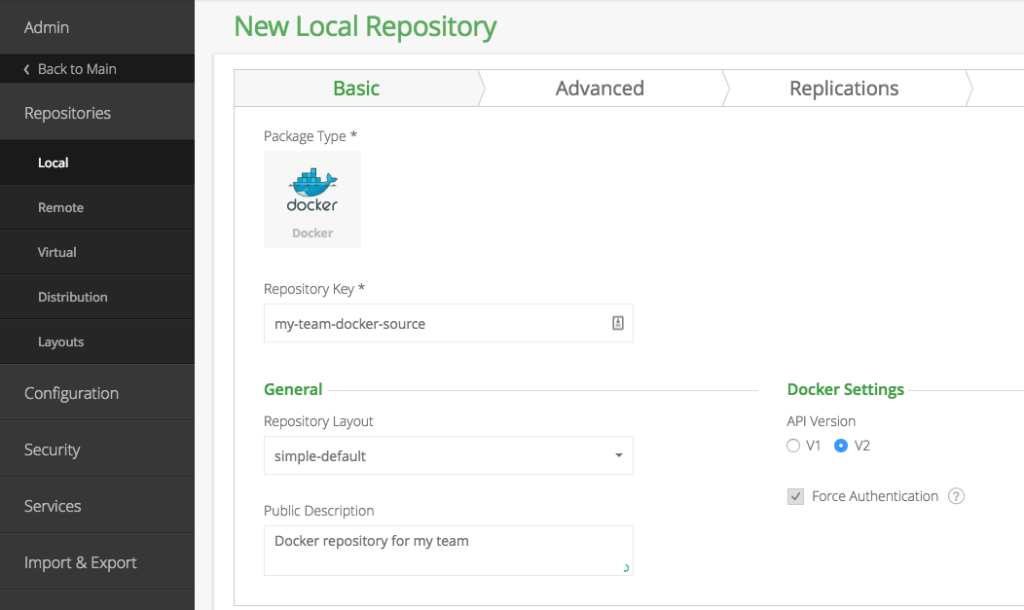
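Repositories can also be created with the Artifactory REST API instead of the UI. This sketch assumes the `PUT /api/repositories/{repoKey}` endpoint with a JSON repository configuration (`key`, `rclass`, `packageType`, and for a virtual repository the `repositories` list of aggregated keys); the host, repository keys and credentials are hypothetical, and the function only builds the request.

```python
import base64
import json
import urllib.request


def build_create_repo_request(server: str, key: str, rclass: str, user: str, password: str,
                              **extra) -> urllib.request.Request:
    """Build (but do not send) a request that creates a Docker repository.

    Assumption: PUT {server}/api/repositories/{key} with a JSON body;
    `rclass` is "local", "remote" or "virtual"; `extra` adds further fields.
    """
    config = {"key": key, "rclass": rclass, "packageType": "docker", **extra}
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        f"{server.rstrip('/')}/api/repositories/{key}",
        data=json.dumps(config).encode("utf-8"),
        method="PUT",
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
    )


if __name__ == "__main__":
    # A virtual Docker repository; aggregated repository keys go in `repositories`.
    req = build_create_repo_request("http://localhost:8081/artifactory", "docker-virtual",
                                    "virtual", "admin", "password", repositories=[])
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

The same function with `rclass="local"` describes a local Docker repository.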

Create the Docker local repository

The local Docker repository will store the Docker images.

- On the left side select Admin,

- In the Repositories section on the Local page click New,

- Select the Docker package type,

- Enter a name for the repository and click Next,

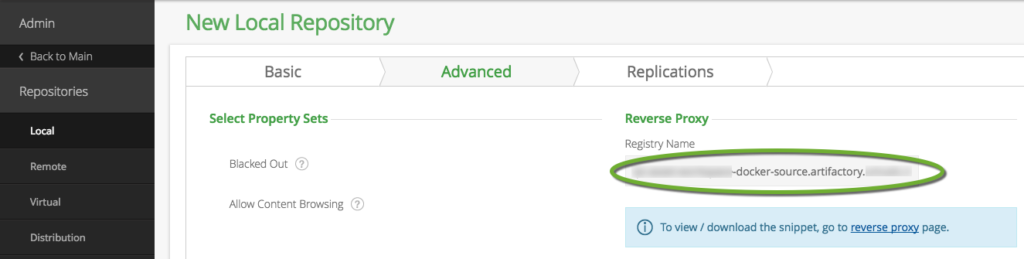

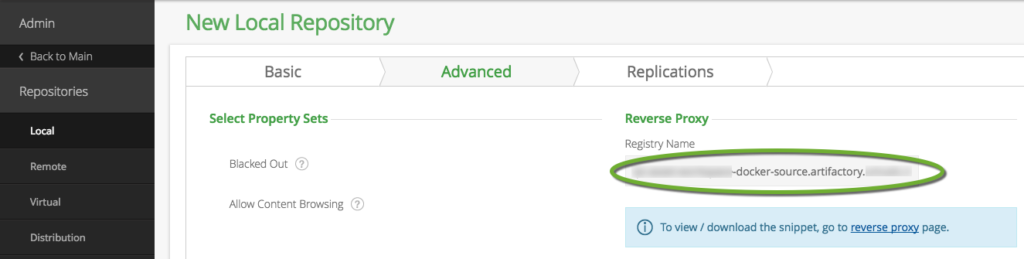

- The Advanced tab shows the address of the repository using the reverse proxy,

- Click the Save & Finish button to create the repository.
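Once the local repository exists, Docker clients push to it through the address shown on the Advanced tab. The sketch below only assembles the standard `docker login`, `docker tag` and `docker push` commands; the registry address `docker-local.artifactory.example.com` and the image `myapp:1.0` are hypothetical, so substitute the address your Advanced tab shows.

```python
from __future__ import annotations


def push_commands(registry: str, image: str, tag: str) -> list[list[str]]:
    """Return the docker CLI commands that push image:tag to `registry`."""
    target = f"{registry}/{image}:{tag}"
    return [
        ["docker", "login", registry],               # prompts for Artifactory credentials
        ["docker", "tag", f"{image}:{tag}", target],  # name the image for the registry
        ["docker", "push", target],                  # upload it through the reverse proxy
    ]


if __name__ == "__main__":
    for cmd in push_commands("docker-local.artifactory.example.com", "myapp", "1.0"):
        print(" ".join(cmd))
        # subprocess.run(cmd, check=True) would execute each step.
```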